It is an all too common feeling, that sinking feeling that leads to the phrase “Oh Crap” being muttered under your breath. You just spent almost a year getting management to pay for a new compute workstation, server or cluster. You did the ROI and showed an eight-month payback because of how much faster your team’s runs will be. But now you have the benchmark data on real models, and they are not good. “Oh Crap”

Although this is a frequent problem and the root causes are often the same, the solutions can vary. In this post I will try to share with you what our IT and ANSYS technical support staff here at PADT have learned.

Hopefully this article can help you avoid or work around current and future pitfalls when you order an HPC system. PADT loves numerical simulation, and we have been doing it for twenty years now. We enjoy helping, so if you are stuck in this situation, let us know.

Wall Clock Time

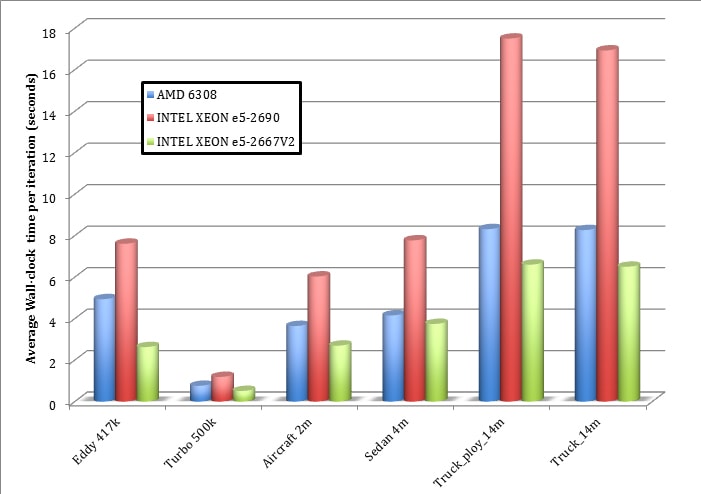

It is very easy to get excited about clock speeds, bus bandwidth, and disk access latency. But if you are solving large FEA or CFD models you really only care about one thing: wall clock time. We cannot tell you how many times we have worked with customers, hardware vendors, and sometimes developers who get all wrapped up in optimizing one little aspect of the solving process. The problem with this is that high performance computing is about working in a system, and the system is only as good as its weakest link.

We see people spend thousands on disk drives and high speed disk controllers, only to discover that their solves are CPU bound and that better disk drives make no difference. We also see people blow their budget on the very best CPUs but not invest in enough memory to solve their problems in-core. This often happens because when they look at benchmark data, they look at one small portion and maximize that measurement, when that measurement often doesn’t really matter.

The fundamental thing that you need to keep in mind while ordering or fixing an HPC system for numerical simulation is this: all that matters is how long it takes in the real world from when you click “Solve” till your job is finished. I bring this up first because it is so fundamental, and so often ignored.
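To make the distinction concrete, here is a minimal Python sketch of the difference between wall clock time and CPU time. It is only an illustration: `time_run`, `waiting_job`, and `crunching_job` are hypothetical names, the sleep merely stands in for a run that waits on disk or network, and `time.process_time()` only counts CPU time used by the Python process itself, so a real solver launched as a separate process would need to be measured differently.

```python
import time


def time_run(work):
    """Run work() and return (wall_seconds, cpu_seconds) for this process."""
    wall_start = time.perf_counter()   # elapsed real-world time
    cpu_start = time.process_time()    # CPU time actually consumed
    work()
    wall = time.perf_counter() - wall_start
    cpu = time.process_time() - cpu_start
    return wall, cpu


def waiting_job():
    # Hypothetical stand-in for a run that spends its time waiting on I/O.
    time.sleep(0.2)


def crunching_job():
    # Hypothetical stand-in for a run that keeps the CPU busy.
    total = 0
    for i in range(2_000_000):
        total += i * i
    return total


for name, job in [("waiting", waiting_job), ("crunching", crunching_job)]:
    wall, cpu = time_run(job)
    print(f"{name}: wall {wall:.2f}s, cpu {cpu:.2f}s")
```

The waiting job burns almost no CPU time, yet you still sit there for its full wall clock time. A large gap between the two numbers is a hint that the processor is idle and something else in the system is the weak link.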

The Causes

As mentioned above, an HPC server or cluster is a system made up of hardware, software, and the people who support it. And it is only as good as its weakest link. The key to designing or fixing your HPC system is to look at it as a system, find the weakest link, and improve that link’s performance. (OK, who remembers the “Weakest Link” lady? You know you kind of miss her…)
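A quick back-of-the-envelope sketch shows why the weakest link matters so much. The phase names and minutes below are made-up numbers for illustration, not measurements from any real solve, and the model simply assumes the phases run one after another.

```python
def wall_clock(phases):
    """Total wall clock time, assuming the phases run back to back."""
    return sum(phases.values())


def speed_up(phases, name, factor):
    """Return a copy of phases with one phase made `factor` times faster."""
    faster = dict(phases)
    faster[name] = phases[name] / factor
    return faster


# Hypothetical minutes spent in each phase of one solve (assumed values).
baseline = {
    "read_input": 5.0,
    "cpu_solve": 20.0,
    "scratch_io": 70.0,
    "write_results": 5.0,
}

weakest = max(baseline, key=baseline.get)
print(f"weakest link: {weakest}")

for name in ("cpu_solve", "scratch_io"):
    new_total = wall_clock(speed_up(baseline, name, 2))
    print(f"{name} 2x faster: {wall_clock(baseline)} -> {new_total} min")
```

With these assumed numbers, doubling CPU speed only takes the run from 100 to 90 minutes, while doubling scratch I/O speed takes it to 65. The same money spent on the wrong component buys almost nothing.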

In our experience we have found that the cause for most poorly performing systems can be grouped into one of these categories:

- Unbalanced System for the Problems Being Solved: One of the components in the system cannot keep up with the others. This can be hardware or software. More often than not it is the hardware being used. Let’s take a quick look at several gotchas in a misconfigured numerical simulation machine.

- I/O is a Bottleneck: Number crunching, memory, and storage are only as fast as the devices that transfer data between them.

- Configured Wrong: Out of simple lack of experience the wrong hardware is used, the OS settings are wrong, or drivers are not configured properly.

- Unnecessary Stuff Added out of Fear: People tend to overcompensate out of fear that something bad might happen, so they burden a system with software and redundant hardware to avoid a one in a hundred chance of failure, and slow down the other ninety-nine runs in the process.

Avoiding an Expensive Medium Performance Computing (MPC) System

The key to avoiding these situations is to work with an expert who knows the hardware AND the software, or become that expert yourself. That starts with reading the ANSYS documentation, which is fairly complete and detailed.

Oftentimes your hardware provider will present themselves as the expert, and their heart may be in the right place. But only a handful of hardware providers really understand HPC for simulation. Most simply try to sell you the “best” configuration you can afford and don’t understand the causes of poor performance listed above. More often than we like, they sell a system that is great for databases, web serving, or virtual machines. That is not what you need.

A true numerical simulation hardware or software expert should ask you questions about the following (if they don’t, you should move on):

- What solver will you use the most?

- What is more important, cost or performance? Or better: Where do you want to be on the cost vs. performance curve?

- How much scratch space do you need during a solve? How much storage do you need for the files you keep from a run?

- How will you be accessing the systems, sending data back and forth, and managing your runs?

Another good test of an expert: if you have both FEA and CFD needs, they should not recommend a single system for you. You may be constrained by budget, but an expert should know the difference between the two solvers vis-à-vis HPC and design separate solutions for each.

If they push virtual machines on you, show them the door.

The next thing you should do is step back and take the advice of writing instructors. Start cutting stuff. (I know, if you have read my blog posts for a while, you know I’m not practicing what I preach. But you should see the first drafts…) You really don’t need huge, costly UPSs, the expensive archival backup system, or some arctic chill bubbling liquid nitrogen cooling system. Think of it as a race car: if it doesn’t make the car go faster or keep the driver safe, you don’t need it.

A hard but important step in cutting things down to the basics is to try to let go of the emotional aspect. It is in many ways like picking out a car, and the truth is, the red paint job doesn’t make it go any faster, and the fancy tail pipes will look good but also don’t help. Don’t design for the worst-case model either. If 90% of your models run in 32GB of RAM, don’t buy a 128GB system for the one run a year that is that big. Suffer a slow solve on that one and use the money to get a faster CPU, a better disk array, or maybe a second box.
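If you keep a record of how much memory your past runs needed, the sizing rule above is easy to apply. The sketch below is one simple way to do it, not a PADT tool: `ram_for_coverage`, the list of standard memory sizes, and the `history` numbers are all assumptions made up for illustration.

```python
import math


def ram_for_coverage(peak_gb_per_run, coverage_pct=90,
                     sizes=(16, 32, 64, 128, 256)):
    """Smallest standard RAM size (GB) that fits coverage_pct of past runs."""
    ordered = sorted(peak_gb_per_run)
    # Index of the run sitting at the requested coverage level.
    idx = math.ceil(coverage_pct * len(ordered) / 100) - 1
    needed = ordered[max(idx, 0)]
    for size in sizes:
        if size >= needed:
            return size
    return sizes[-1]  # nothing in the list is big enough; take the largest


# Hypothetical peak memory (GB) for ten past runs; one outlier at 110 GB.
history = [6, 8, 10, 12, 14, 18, 22, 26, 30, 110]

print(ram_for_coverage(history))                    # sized for 90% of runs
print(ram_for_coverage(history, coverage_pct=100))  # sized for the worst case
```

With this made-up history, sizing for 90% of the runs lands on 32GB, while sizing for the single worst case lands on 128GB. That difference is the money you could put toward a faster CPU, a better disk array, or a second box.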

Fixing the System You Have

As of late we have started tearing down clusters in numerous companies around the US. Of course we would love to sell you new hardware. However, at PADT, as mentioned before, we love numerical simulation. Fixing your current system may allow you to stretch that investment another year or more. As a co-owner of a twenty-year-old company, this makes me feel good about that initial investment. When we sic our IT team on extending the life of one of our systems, I start thinking about and planning for that next $150k investment we will need to make in a year or more.

Breathing new life into your existing hardware requires almost the same steps as avoiding a bad system in the first place. PADT has sent our team around the country helping companies breathe new life into their existing infrastructure. The steps they use are the same, but instead of designing things, we change things. Work with an expert, start cutting stuff out, breathe new life into the aging hardware, avoid fear and “cool factor” based choices, and verify everything.

Take a look at the output from your solvers and make sure you understand it. There is a lot of data in there. As an example, here is an article we wrote describing some of those hidden gems within your numerical simulation outputs. https://www.padtinc.com/blog/the-focus/ansys-mechanical-io-bound-cpu-bound

Play with things, see what helps and what hurts. It may be time to bring in an outside expert to look at things with fresh eyes.

Do not be afraid to push back against what IT is suggesting. Unless you are very fortunate, they probably don’t have the same understanding as you do when it comes to numerical simulation computing. They care about security and minimizing the cost of maintaining systems. They may not be risk takers and they don’t like non-standard solutions. All of these can often result in a system that is configured for IT, and not for fast numerical simulation solves. You may have to bring in senior management to solve this issue.

PADT is Here to Help

The easiest way to avoid all of this is to simply purchase your HPC hardware from PADT. We know simulation, we know HPC, and we can translate between engineers and IT. This is simply because simulation is what we do, and have done since 1994. We can configure the right system to meet your needs, at that point on the price performance curve you want. Our CUBE systems also come preloaded and tested with your simulation software, so you don’t have to worry about getting things to work once the hardware shows up.

Learn more on our HPC Server and Cluster Performance Tuning page, or by contacting us. We would love to help out. It is what we like to do and we are good at it.