A Little Project Background

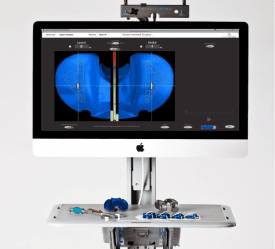

Recently I’ve been working on a computer vision system for a long-standing customer. We are developing software that enables them to use computers to “see” where a particular object is in space and accurately determine its location with respect to the camera. From that information, they can do all kinds of useful things.

In order to figure out where something is in 3D space from a 2D image, you have to perform what is commonly referred to as pose estimation. It’s a highly interesting problem by itself, but it’s not something I want to focus on in detail here. If you are interested in more information, you can Google pose estimation or Perspective-n-Point (PnP) problems. There are, however, a couple of aspects of that problem that do pertain to this blog article. First, pose estimation is typically a nonlinear, iterative process. (Not all algorithms are iterative, but the ones I’m using are.) Second, like any algorithm, its output depends on its input; namely, the accuracy of its pose estimate depends on the accuracy of the upstream image processing techniques. Whatever error happens upstream of this algorithm typically gets magnified as the algorithm processes the input.

The Problem I Wish to Solve

You might be wondering where we are going with HPC given all this talk about computer vision. It’s true that computer vision, especially image processing, is computationally intensive, but I’m not going to focus on that aspect. The problem I wanted to solve was this: Is there a particular kind of pattern that I can use as a target for the vision system such that the pose estimation is less sensitive to the input noise? In order to quantify “less sensitive” I needed to do some statistics. Statistics is almost-math, but just a hair shy. You can translate that statement as: My brain neither likes nor speaks statistics… (The probability of me not understanding statistical jargon is statistically significant. I took a p-test in a cup to figure that out…) At any rate, one thing that ALL statistics requires is a data set. A big data set. Making big data sets sounds like an HPC problem, and hence it was time to roll my own HPC.
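To make “less sensitive” a bit more concrete, here is a minimal sketch of the kind of Monte Carlo measurement involved. It is not the actual pose estimator: it assumes a toy one-dimensional stand-in (estimating distance from the apparent pixel width of a target under a pinhole camera model), and the names `makeRng`, `gaussian`, `distanceSpread`, and all of the numbers are made up for illustration.

```javascript
// Small seeded PRNG (mulberry32) so that repeated runs give the same samples.
function makeRng(seed) {
  let a = seed | 0;
  return function () {
    a = (a + 0x6d2b79f5) | 0;
    let t = Math.imul(a ^ (a >>> 15), 1 | a);
    t = (t + Math.imul(t ^ (t >>> 7), 61 | t)) ^ t;
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // uniform in [0, 1)
  };
}

// Standard normal sample via the Box-Muller transform.
function gaussian(rng) {
  const u1 = 1 - rng(); // shift to (0, 1] so that log(u1) is finite
  const u2 = rng();
  return Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
}

// Toy stand-in for pose estimation (an assumption, not the real algorithm):
// distance = focalPx * targetWidthM / measuredPixelWidth.
// Returns the sample standard deviation of the distance estimate when the
// measured pixel width is corrupted by Gaussian noise.
function distanceSpread(targetWidthM, opts) {
  const { focalPx, trueDistanceM, noisePx, samples, seed } = opts;
  const rng = makeRng(seed);
  const truePixelWidth = (focalPx * targetWidthM) / trueDistanceM;

  let count = 0;
  let mean = 0;
  let m2 = 0; // running sum of squared deviations (Welford's method)
  for (let i = 0; i < samples; i++) {
    const measured = truePixelWidth + noisePx * gaussian(rng);
    const estimate = (focalPx * targetWidthM) / measured;
    count++;
    const delta = estimate - mean;
    mean += delta / count;
    m2 += delta * (estimate - mean);
  }
  return Math.sqrt(m2 / (count - 1));
}

// Hypothetical settings: same camera, same noise, two candidate target sizes.
const settings = { focalPx: 1000, trueDistanceM: 2, noisePx: 0.5, samples: 20000, seed: 42 };
const narrowSpread = distanceSpread(0.05, settings);
const wideSpread = distanceSpread(0.2, settings);
console.log("narrow target spread (m):", narrowSpread);
console.log("wide target spread (m):", wideSpread);
```

Under this toy model the wider target should show the smaller spread, which is the sort of comparison the statistics are for. The real question involves full 3D poses and far more samples, which is where the big data set comes in.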

The Toolbox and the Solution

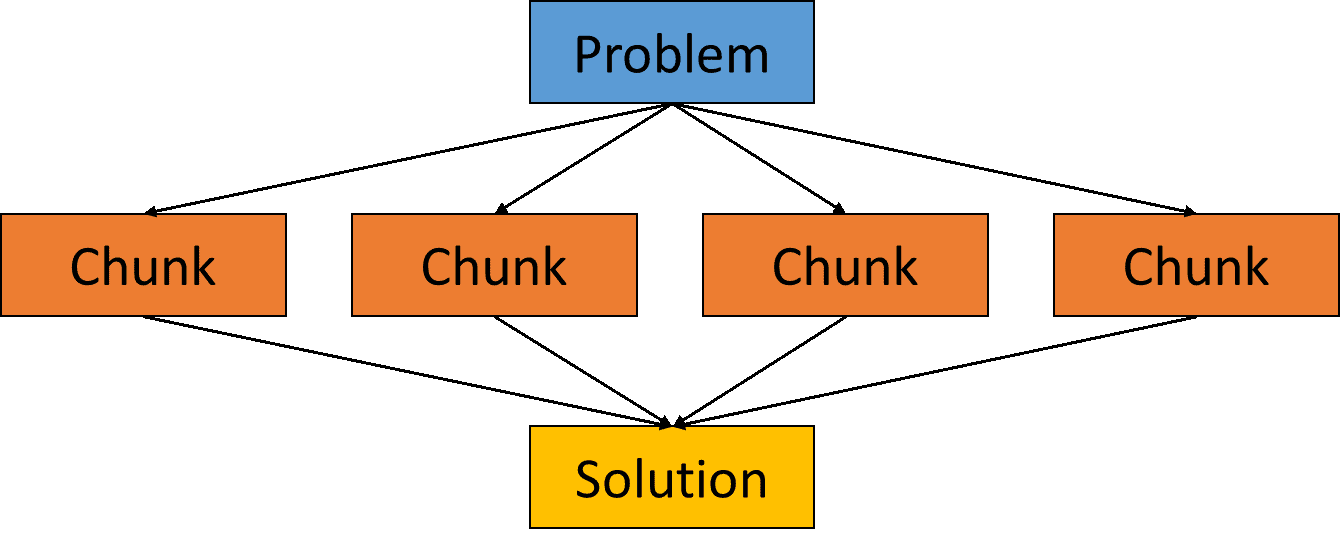

My problem reduced to a classic Monte Carlo simulation. This type of problem maps very nicely onto a parallel processing paradigm known as Map-Reduce. The concept is shown in the diagram above.

The idea is pretty simple. You break the problem into chunks and you “Map” those chunks onto available processors. The processors do some work and then you “Reduce” the solution from each chunk into a single answer. This algorithm is recursive. That is, any single “Chunk” can itself become a new blue “Problem” that can be subdivided. As you can see, you can get explosive parallelism.
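The recursive chunk, map, and reduce idea can be sketched in a few lines. This is only an illustration under assumptions: the function names (`summarize`, `merge`, `mapReduce`) and the choice of "mean and variance of some samples" as the problem are hypothetical, and the chunks are processed one after another here rather than being shipped to separate processors.

```javascript
// Leaf work ("Map"): summarize one chunk as { count, mean, m2 },
// where m2 is the sum of squared deviations from the mean.
function summarize(samples) {
  let count = 0;
  let mean = 0;
  let m2 = 0;
  for (const x of samples) {
    count++;
    const delta = x - mean;
    mean += delta / count;
    m2 += delta * (x - mean);
  }
  return { count, mean, m2 };
}

// "Reduce": merge two partial summaries into one, using the standard
// pairwise formula for combining means and squared deviations.
function merge(a, b) {
  if (a.count === 0) return b;
  if (b.count === 0) return a;
  const count = a.count + b.count;
  const delta = b.mean - a.mean;
  return {
    count,
    mean: a.mean + (delta * b.count) / count,
    m2: a.m2 + b.m2 + (delta * delta * a.count * b.count) / count,
  };
}

// Recursive Map-Reduce: any chunk that is still too big becomes a new
// "Problem" and is subdivided again.
function mapReduce(samples, leafSize, fanOut) {
  const leaf = Math.max(1, leafSize); // guard against non-terminating splits
  const fan = Math.max(2, fanOut);
  if (samples.length <= leaf) return summarize(samples);

  const chunkSize = Math.ceil(samples.length / fan);
  const chunks = [];
  for (let i = 0; i < samples.length; i += chunkSize) {
    chunks.push(samples.slice(i, i + chunkSize));
  }
  // In a real cluster, each of these calls would run on a different processor.
  return chunks.map((chunk) => mapReduce(chunk, leaf, fan)).reduce(merge);
}

const total = mapReduce([1, 2, 3, 4, 5, 6, 7, 8, 9, 10], 2, 3);
console.log(total.count, total.mean, total.m2 / (total.count - 1));
```

The property that makes this work is that `merge` gives the same answer no matter how the samples were split, so the subdivision can go as deep as the available hardware allows.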

Now, there are tools that exist for this kind of thing. Hadoop is one such tool. I’m sure it is vastly superior to what I ended up using and implementing. However, I didn’t want to invest at this time in learning a specialized tool for this particular problem. I wanted to investigate a lower level tool on which this type of solution can be built. The tool I chose was node.js (www.nodejs.org).

I’m finding Node to be an awesome tool for hooking computers together in novel ways. It acts kind of like the post office: you can send and receive letters and messages, all while going about your normal day. It handles all of the coordinating and transporting. It basically sends out a helpful postman who taps you on the shoulder and says, “Hey, here’s a letter.” You are expected to do something (quickly) and maybe send back a letter to the original sender or someone else.

More specifically, Node turns everything that a computer can do into a “tap on the shoulder”, or an event. A request like “Hey, go read this file for me” turns into “OK. I’m happy to do that. I tell you what, I’ll tap you on the shoulder when I’m done. No need to wait for me.” So now, instead of twiddling your thumbs while the computer spins up the hard drive, finds the file and reads it, you get to go do something else you need to do.

As you can imagine, this is a really awesome way of doing things when network latency, spinning hard drives and little child processes doing useful work are all chewing up valuable time, time that you could be using to get someone else started on some useful work. Also, like all children, these helpful little child processes never seem to take the same time to do the same task twice. However, simply being notified when they are done allows the coordinator to move on to other children. Think of a teacher in a classroom. Everyone is doing work, but not at the same pace. Imagine if the teacher could only focus on one child at a time until that child fully finished. Nothing would ever get done!
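As a rough sketch of the “tap on the shoulder” style of coordination, the snippet below hands chunks of work to a fixed number of workers and gives a worker its next chunk only when it reports back. It is a simplified illustration, not the actual cluster code: `coordinate` and `simulatedWorker` are hypothetical names, and the workers are faked with timers of random length instead of real child processes or remote machines.

```javascript
// Hand out chunks to workers and react whenever one of them reports "done".
// startWork(workerId, chunk, done) is assumed to return immediately and to
// call done(result) later, when the work has finished.
function coordinate(chunks, workerCount, startWork, onAllDone) {
  const results = new Array(chunks.length);
  let next = 0; // index of the next chunk to hand out
  let finished = 0; // number of chunks completed so far

  if (chunks.length === 0) {
    onAllDone(results);
    return;
  }

  function dispatch(workerId) {
    if (next >= chunks.length) return; // nothing left for this worker
    const index = next++;
    startWork(workerId, chunks[index], (result) => {
      results[index] = result; // keep results in chunk order
      finished++;
      if (finished === chunks.length) {
        onAllDone(results);
      } else {
        dispatch(workerId); // this worker is free again, so give it more
      }
    });
  }

  // Get every worker started, then simply wait to be tapped on the shoulder.
  for (let w = 0; w < workerCount; w++) dispatch(w);
}

// Stand-in worker (an assumption): squares its chunk after a random delay,
// mimicking children that never take the same time twice.
function simulatedWorker(workerId, chunk, done) {
  const delayMs = 5 + Math.floor(Math.random() * 20);
  setTimeout(() => done(chunk * chunk), delayMs);
}
```

Calling `coordinate([1, 2, 3, 4, 5], 2, simulatedWorker, (r) => console.log(r))` keeps both simulated workers busy until all five chunks are done. The coordinator itself never blocks, and it does almost no work of its own between notifications.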

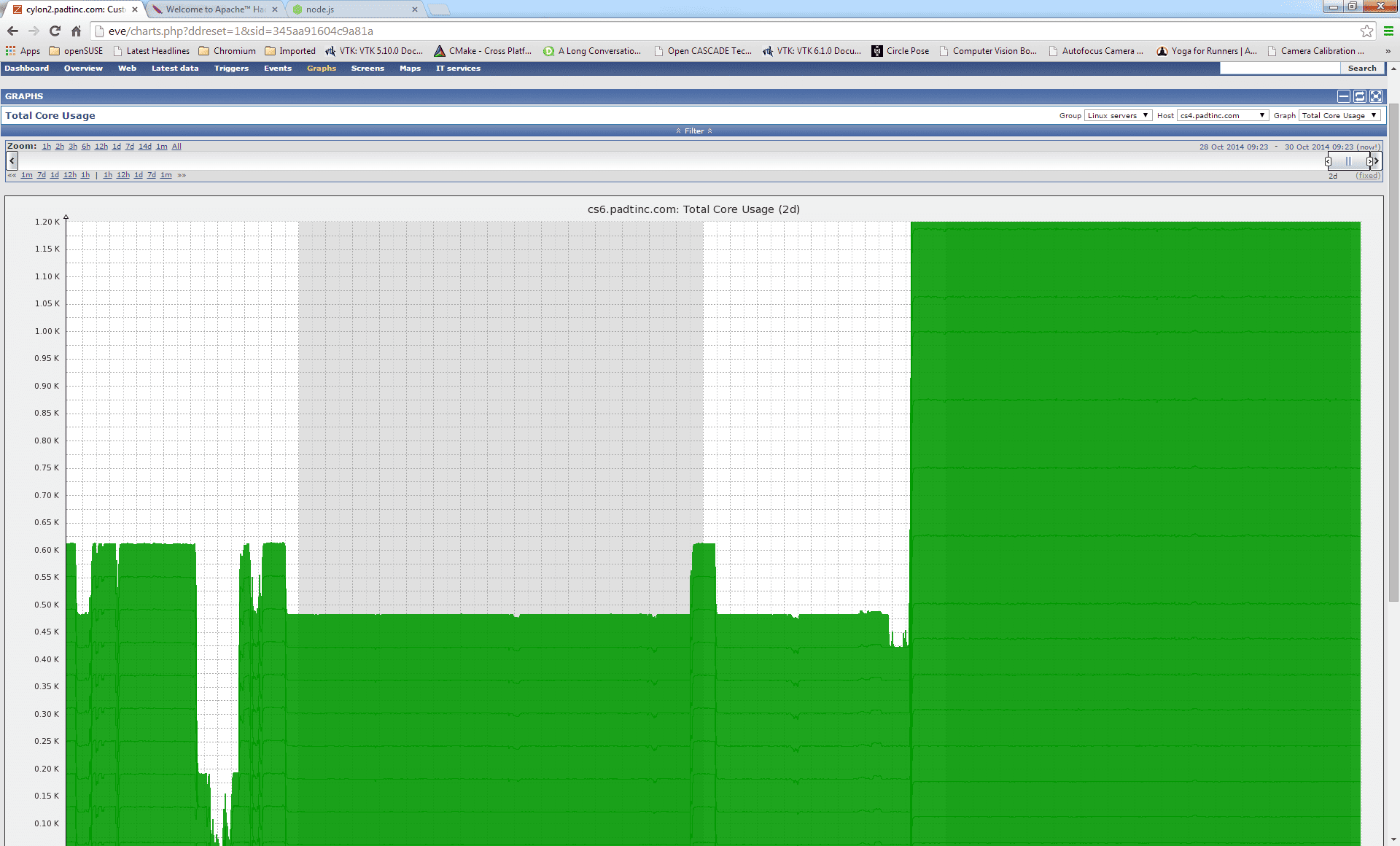

The graph above shows our internal cluster at PADT cranking away on my Monte Carlo simulation.

It’s probably impossible to read the axes, but that’s 1200+ cores cranking away. Now, here is the real kicker. All of the machines have an instance of node running on them, but one machine is coordinating the whole thing. The CPU on the master node barely nudges above idle. That is, this computer can manage and distribute all this work by barely lifting a finger.

Conclusion

There are a couple of things I want to draw your attention to as I wrap this up.

- CUBE systems aren’t only useful for CAE simulation HPC! They can be used for a wide range of HPC needs.

- PADT has a great deal of experience in software development both within the CAE ecosystem and outside of this ecosystem. This is one of the more enjoyable aspects of my job in particular.

- Learning new things is a blast and can have benefits in other aspects of life. Thinking about how to structure a problem as a series of events rather than a sequential series of steps has been very enlightening. In more ways than one, it is also why this blog article exists. My Monte Carlo simulator is running right now. I’m waiting on it to finish. My natural tendency is to busy wait. That is, spin brain cycles watching the CPU graph or the status counter tick down. However, in the time I’ve taken to write this article, my simulator has proceeded in parallel to my effort by eight steps. Each step represents generating and reducing a sample of 500,000,000 pose estimates! That is 4 billion pose estimates in a little under an hour. I’ve managed to write 1,167 words…